Introducing the SaaS Capital AI Assessment Framework

February 17, 2026

The wide availability of Artificial Intelligence (AI) beginning in 2025, and in particular of the generative models known as LLMs, has given rise to fevered speculation about the potential effects on the Business-to-Business Software-as-a-Service (B2B SaaS) market. SaaS is not dying, and a single generalist AI model will not replace all software. However, as with other transformational advances in software (such as the move from mainframe to PC; the proliferation of Open Source; the move to hosted single- and multi-tenant app delivery; and the ubiquity of smartphones for app consumption), there will be a reshuffling of the deck. Competitive forces will be much different in 2027 than they were in 2023. Our aim in developing our AI Framework was to guide our thinking about competitive risks to existing B2B SaaS companies in a systematic, quantitative, and actionable way. Our background positions us uniquely to do so.

Our Fundamental Belief About B2B SaaS

SaaS Capital has assessed several thousand B2B SaaS businesses over the past 15+ years, and we have lent to and monitored the monthly financial and operational performance of over 130 such firms. Over time, we have seen many changes to what the market calls “SaaS,” but we believe that the fundamentally stable essence of SaaS is this:

Enterprises continually need to learn, manage, document, describe, and improve various processes. They adopt systems to do so. Those systems must capture the ground truth about the reality of those business processes, and they must adapt to changes in how the industry operates. Since those systems cannot all be core to a given enterprise’s mission, by the principle of comparative advantage, every enterprise will find benefit by having someone else manage at least some of those systems.

In 1926, those systems comprised endless paper forms, flow charts, and an army of diligent clerks. In 1976, they might have been written in COBOL and FORTRAN and stored on tapes. And in 2026, they are by and large SaaS products, written into data stores in AWS or Azure. Then, as now, the parties that specialize in delivering those systems will almost always present a better value proposition than an enterprise inventing and developing such systems in-house.

What is common among all such systems is that they must be actually true (not imputed, estimated, or hallucinated); persistent (stored for the useful or legally required life of the information); and flexibly adaptive (to deal with the ever-changing outside world).

In 2024-2025, we watched as AI began to affect new borrower prospects, as well as our portfolio companies and their customers. Some companies seemed to have a tailwind from AI; others a headwind.

How We Chose the Three “Chicago” Dimensions

In the fall of 2025, we convened an offsite in Chicago with our investment team. In preparation for the event, we reviewed our portfolio, including identifying those companies that seemed to have faced the most challenges recently, as well as those that fared best. We reflected upon what we had been seeing in the market from new entrants as well. Using a mix of qualitative facilitation techniques and quantitative approaches such as value voting, we identified 10-12 distinct factors that we believe contributed most to the relative well-being of the companies we observed. Upon grouping and narrowing down, we developed the three candidate Rating Dimensions that we believe are close to the “principal components” of B2B SaaS AI risk as we understand it. We address each of these below.

SoR: The characteristic of being a System of Record (SoR) is present in many (though not all) SaaS businesses. We have observed that, all else equal, this is one of the strongest motivators for customer retention and a natural fit with our view of what SaaS actually is good at. AI synthesizes existing information, but does not create new truth. Therefore, systems that capture and house the ground truth about a business’s processes – provided those systems are effective, cost-efficient, and an elegant fit for the users – have excellent resistance to being displaced by AI.

NSC: Our Chicago offsite session yielded, at first, a large set of seemingly incoherent aspects of competitive advantage, each of which was relatively rare within our portfolio. The “aha” moment for us was realizing that each of these was some capability not inherent to pushing bits and bytes that a company provided in a privileged fashion to its customers. We call these Non-Software Complements (NSC), and they are present in some but not all SaaS businesses. Strictly speaking, NSCs make a SaaS company less purely Software-as-a-Service, and we admit that in years past, particularly with respect to mixed hardware/software business models, we viewed that as a downside. Our perspective has changed. Any application that works by synthesizing or analyzing pure informational inputs and outputs now faces the challenge of justifying its independent existence: otherwise, an AI “super-app” eventually subsumes it. We believe there is still a place for certain kinds of pure software, but it will be the exception, not the rule, and market participants should be clear-eyed about this new reality.

U&U: We chose User and Usage (U&U) based upon some internal research we had conducted over the past five years. That research showed us that almost always, increasing Annual Contract Value (ACV) correlates with improvements across the business: growth rate, sales efficiency, gross margin, net revenue retention, and profitability. Selling something more valuable allows you to create more value for the customer and capture more value for the vendor – but it also requires that you actually do both. It’s impossible long-term to capture $1 of value if you aren’t creating a multiple of that value. And in turn, it’s more feasible to create high value by improving or facilitating big-dollar processes, or high-stakes decisions by highly compensated executives. It’s also true that, optics or fairness be damned, top executives are far more likely to cut budgets – headcount, but also tooling such as SaaS – for the lower ranks before they cut the tools and services they personally touch.

Internally Testing the Rating Dimensions

Prior to releasing our Framework publicly, we began to use an early version internally to structure our thinking during underwriting due diligence on potential borrowers. We needed an approach that would keep our thinking consistent, but that would also limit the time and nuance required (AI being one of very many financial and non-financial risk factors we need to work through to get to a prompt and decisive answer).

By requiring a 1 to 4 whole number answer, and by having only three dimensions, all ratings (and their average) must be biased in one direction or another. This prevents the “analysis paralysis” escape of giving a “middle of the road” rating. (There’s no way to get a 2.5 Mean Rating.)
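The arithmetic behind this claim can be checked directly. The sketch below simply enumerates every combination of three whole-number ratings from 1 to 4 and collects the resulting averages; the variable names are illustrative only and are not part of the Framework.

```python
from fractions import Fraction
from itertools import product

SCALE = range(1, 5)  # whole-number ratings 1, 2, 3, 4

# Every Mean Rating reachable with three dimensions, kept exact as fractions.
means = sorted({Fraction(sum(combo), 3) for combo in product(SCALE, repeat=3)})

print([round(float(m), 2) for m in means])
# [1.0, 1.33, 1.67, 2.0, 2.33, 2.67, 3.0, 3.33, 3.67, 4.0]
print(Fraction(5, 2) in means)  # False
```

The midpoint of the scale (2.5) would require the three ratings to sum to 7.5, which whole numbers cannot do, so every Mean Rating lands at 2.33 or below, or at 2.67 or above.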

Once we had applied the framework to ten or so companies, we developed a familiarity. After a few minutes reviewing a new company’s pitch, we were able to discern a meaningful Framework rating. We also trimmed down the Framework, dropping a candidate rating dimension (vertical industry risk to the business’s customers) because it proved too hard to objectively assess in a short time, and created “noise” in the data.

The Framework rating was rarely, on its own, dispositive of how to proceed with a deal. But in the case of marginal deals or “tough calls,” it often helped us reach clarity on why to lean into a risky situation, or why ultimately to avoid it. Although this was sometimes useful for companies with borderline financial metrics, it was also particularly useful in deciding how to interpret a performance narrative.

As an example, we saw one company that claimed it had “turned the corner” on customer retention with some product improvements and customer segmentation work. But when we applied the AI framework, it helped us decide that the new strategy was still not a strong bulwark against AI risk. We declined to proceed.

In another case, however, we reached the opposite conclusion: after seeing them move away from a legacy product (with declining retention) that scored very poorly on the framework, and toward a new, growing line of business that scored very well on the framework, we chose to proceed working with a borrower whose consolidated financial metrics may otherwise have seemed marginal. The Framework revealed a momentum of improvement in advance of realized retention metrics.

Externally Validating the Framework

Having used the framework for a few months internally, we were still hesitant to share it externally. SaaS Capital has a strongly data-driven reputation. Even if we had an internally useful “thinking hat,” was it appropriate to claim that others could or should use it meaningfully?

To see whether the framework had applicability beyond our internal process, we decided to run it against the SaaS Capital Index. The SaaS Capital Index (SCI) is a curated set of publicly traded SaaS companies, which we have been tracking and publicizing data about for over a decade. We monitor the SCI, which has ranged between 50 and 100 members over the years, for valuation, growth, and profitability metrics.

In February 2026, we sorted through the SCI to select those index members that were still publicly quoted, and for which public website and/or SEC filing descriptions of their business models were available. We codified our framework definitions of the Chicago Dimensions into precise, formal language (available in Definitions). And we put AI to work measuring AI.

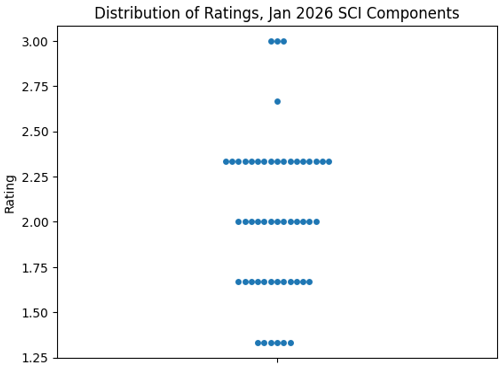

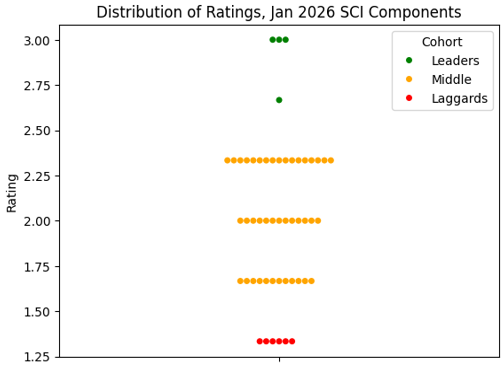

Using Claude Opus 4.5 Extended Reasoning, we had the AI research each of the SCI companies and provide a brief justification of a 1-to-4 integer rating on each. The result was 52 distinct Mean Ratings, ranging from a low of 1.33 to a high of 3.0. The distribution is shown below:

What jumped out at us from the graph were distinct sets of Leaders (2.66 to 3.0) and Laggards (1.33, none below), identifiable in the small handfuls at the top and bottom. We decided to examine these Leaders and Laggards to see if they differed from one another, and from the larger dataset, on any of the historical financial metrics we had tracked.

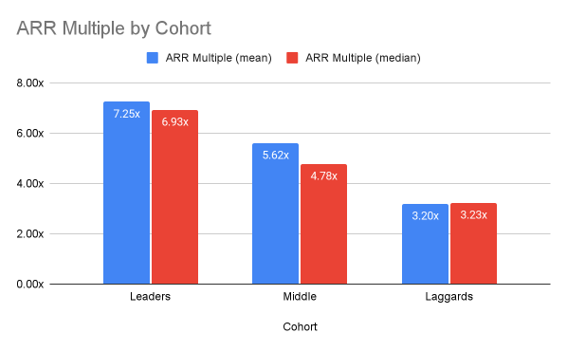

The results of that first analysis are below.

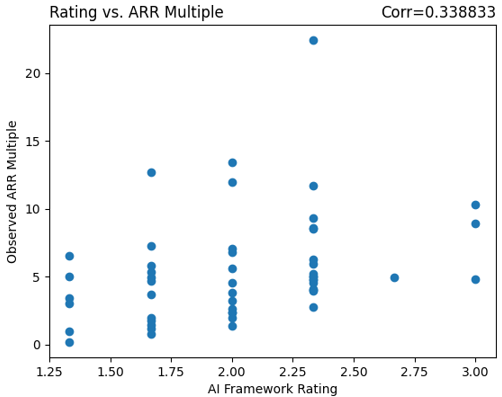

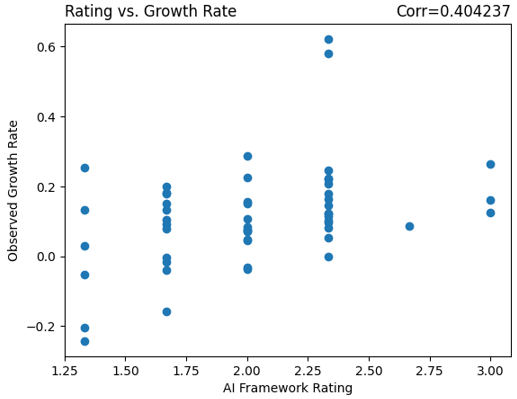

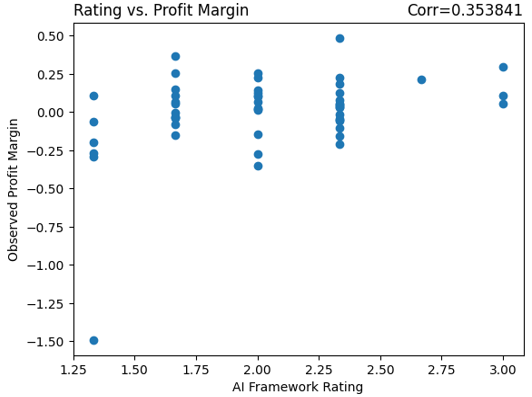

Further analysis of the data showed that this effect was, while most dramatic for the Leaders/Laggards, not limited to them alone. We observed reasonably strong and positive correlations with the AI Framework Rating for ARR Multiple (valuation), Growth Rate, and Profit Margin:

(We should caution the reader that the AI Framework Rating is not meant as a trading signal or a stock screen. Indeed, the reason that the ARR Valuation Multiple correlates positively with the Rating is likely because sophisticated investors have already arrived at a similar conclusion as to the AI risk and sustainability characteristics of those high-rated Leaders, and traded up their prices.)
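For readers who want to run a similar check on their own set of rated businesses, here is a minimal sketch. The article does not say which correlation statistic was used; a Spearman rank correlation is assumed here only because the ratings are ordinal. The function names and the toy inputs in the usage lines are hypothetical and are not SCI data.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the two rank vectors.

    Undefined (division by zero) if either input is constant.
    """
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Toy inputs only: a perfectly increasing relationship gives a coefficient of 1.
toy_ratings = [1, 2, 3]
toy_metric = [10, 20, 30]
print(spearman(toy_ratings, toy_metric))
```

Because Mean Ratings take only ten possible values, ties are common, which is why the sketch assigns average ranks rather than arbitrary ones.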

Competitive Advantage as a Lender, or Why Share?

Once we were confident we had a useful, concise, and externally validated metric, the question arose: why would we share something that helps us to inform our lending? One approach to the matter might be to hide this information, in the hopes that it provides us with some “edge” to our underwriting that lets us better compete.

That is not how we see the world, however. For nearly 16 years, SaaS Capital has been a leader in researching and publishing benchmark data and metrics to aid in assessing the health of SaaS companies. We’ve helped SaaS operators and investors determine where they stand versus peers on everything from percentage of revenue spent on R&D, to what is a good Net Retention Rate for a given Gross Retention Rate, to the ultimate question of SaaS company valuation.

In truth, we as lenders are better served by better-informed SaaS operators. Not only because better-informed operators who approach us are more likely to self-select as a better fit for our financing model, but also because better-informed operators are more likely to build better, stronger SaaS companies.

Our ethos remains that we will continue freely to share our best, broadest insights in the faith that the trust thereby earned in the market over the long term outweighs any short-term gain from hoarding a glimmer of insight or data. This is not a high-frequency trading fund. We “buy and hold” our relationships with borrowers for 3-5 years or more.

B2B AI Risk Assessment Framework Instructions

- For each Rating Dimension below, rate the business from 1-4 in accordance with the Rating Guidelines.

- The Cheat Sheet table is provided as a quick reference, but the Definitions in the Rating Guidelines are authoritative.

- The Example Considerations are meant to help the rater think through the rating. Some, all, or none of the Example Considerations may be applicable to a particular business, or useful, given what is known by the rater.

- No zeros, nulls, or fractional points are permitted.

- The simple average (Mean Rating) is the result.
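The instructions above can be sketched in a few lines of code. This is only an illustration of the stated rules (three dimensions, whole numbers from 1 to 4, no zeros, nulls, or fractions, simple average); the function name, the dictionary keys, and the example ratings are assumptions made for the sketch.

```python
from statistics import mean

DIMENSIONS = ("SoR", "NSC", "U&U")


def mean_rating(ratings):
    """Validate one business's ratings and return their simple average."""
    if set(ratings) != set(DIMENSIONS):
        raise ValueError(f"expected exactly these dimensions: {DIMENSIONS}")
    for name, value in ratings.items():
        # bool is a subclass of int in Python, so reject it explicitly.
        is_whole = isinstance(value, int) and not isinstance(value, bool)
        if not is_whole or not 1 <= value <= 4:
            raise ValueError(f"{name} must be a whole number from 1 to 4, got {value!r}")
    return mean(list(ratings.values()))


example = {"SoR": 3, "NSC": 2, "U&U": 3}  # hypothetical ratings, not a real business
print(round(mean_rating(example), 2))  # 2.67
```

Rejecting invalid inputs outright, rather than rounding or defaulting them, mirrors the rule that no zeros, nulls, or fractional points are permitted.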

| System of Record | Non-Software Complement | User & Usage |
| --- | --- | --- |
| SoR: The definitive “ground truth” stored about a customer’s operations. | NSC: Beyond just code and storage, access to hardware, data, marketplace, etc. | U&U: The seniority and monetary “stakes” of the customers’ user and usage. |
| 1: Not a SoR. Analysis / processing of data which “lives elsewhere,” or else conducts transactions which are recorded elsewhere. | 1: Pure software, no NSC. Customer provides the input, software processes it, and customer receives the output. | 1: Low-stakes decisions or transactions < $1k. Low-status individual contributors (ICs) use the product solo. |
| 2: SoR for low-value data. Primarily historical, comparative, or archival required data. | 2: Off-the-shelf or commodity integrations for convenience, efficiency, or economy. | 2: $10k decisions or transactions. Higher status ICs, or collaboration of ICs, or management users. |
| 3: SoR for strategically important, high-dollar value, or legally required data. | 3: Unique OR high value NSC. | 3: $100k decisions or transactions. VP-level management or exec users. |
| 4: SoR for mission-critical data; disappearance would halt revenue production and create existential risk. | 4: Unique AND high value NSC, e.g., real-time data; proprietary sensor hardware; irreplaceable network bind. | 4: Million-dollar transactions or bet-the-company decisions. C-suite exec users. |

Rating Dimension Definitions

System of Record (SoR)

Definition:

- A System of Record (SoR) is where a customer records the ground truth of some aspect of its operations.

- A SaaS product that is not itself a SoR, including if it merely analyzes ground truth that exists in another SoR, must get a 1.

- A SaaS product that, if it disappeared, would halt revenue production and create existential risk within days to weeks must get a 4.

- If deciding on whether to give a 2 or a 3, an SoR for an important truth, the retention and accuracy of which is incrementally helpful to brand, efficiency, or planning, should get a 2.

- An SoR for an important truth, the retention and accuracy of which is required by law or regulation, or which is a crucial component of profitable, competitive operations for a buyer, should get a 3+.

- In general, HR Ops, L&D, AP/AR, FP&A, and most Martech/Adtech must have an abnormally compelling case to merit above a 2.

Example Considerations:

- How urgent would an outage be? 4=Mission critical/production stoppage; 3=Service level disruption/production slowdown; 2=workflow interruption; 1=Planning change.

- How soon would a product outage affect a customer’s revenue or profit? 4=Within a day; 3=Within a week; 2=Within a month; 1=Within a year.

- What level of compliance risk does the SoR implicate? 4=License to operate/federal/state; 3=Insurance/financial covenant; 2=Partner/industry certification; 1=None/best practice.

Non-Software Complements (NSC)

Definition:

- A Non-Software Complement (NSC) within a SaaS product provides a crucial capability, external to the normal kind of code and data storage that characterize most SaaS applications.

- A product that can be entirely replicated or replaced by code, even if that code is “hard to write” or contains algorithmic complexity, must get a 1.

- Access to a proprietary external resource, such as a high-value proprietary and unique dataset, a closed trading marketplace, or a proprietary hardware/sensor network (not a commodity/open platform network) should get a 3+.

- Access to the customer’s own history of transactions is not considered an NSC (though it may be a SoR) and does not merit extra points.

- Access to a unique namespace that is trivially transferrable, such as a telephone number or domain name, does not, on its own, merit extra points.

- Analytical or predictive applications based upon a SaaS vendor’s history of like transactions, where that vendor history is proprietary and hard to replicate, may merit an extra point.

- Access through a product to one or more scarce network connections (e.g. telco SMS/MMS binds, credit card payment rails, FCC spectrum allocation) may merit extra points.

Example Considerations:

- What level of hardware integration is typically required along with the SaaS product? 4=Proprietary hardware; 3=Vendor provided, white label/COTS[1] hardware; 2=CPE[2] specialty hardware; 1=None, or common such as PC, browser, smartphone.

- What level of scarce network connectivity is provided through the SaaS product? 4=Exclusive, high value (e.g., NYSE, SWIFT, telco data binds); 3=Non-exclusive/medium value (e.g., data cooperative, commercial exchange); 2=Low-cost/commodity billed (SMS, telephony, payment rails, Forex); 1=None, unmetered/commodity bandwidth, public Internet.

- What level of vendor-provided data fusion is provided through the SaaS product? 4=Proprietary, perishable, high-value, non-retained[3]; 3=Proprietary, but not necessarily perishable, high-value, non-retained; 2=Proprietary, but historical, retained, replaceable, or low-value; 1=Public or None.

User and Usage (U&U)

Definition:

- User and Usage (U&U) aims to articulate how valuable the SaaS product and its user are to the customer organization.

- User refers to the actual person who logs in and uses a SaaS product, as well as the functional roles directly impacted by the product’s use and outputs.

- A product used personally and directly by CXOs merits extra points and may merit a 4.

- A product used by persons who support CXOs and where the CXO directly sees outputs (reports, etc.) merits an extra point.

- A product used by individual contributors, without a network or collaborative effect, generally must get a 1.

- Usage which drives “bet the company” decisions or multi-million-dollar transactions merits extra points.

- Usage which primarily affects decisions or transactions of less than $1000 generally must get a 1.

Example Considerations:

- What are the qualitative “stakes” of the decisions informed by the SaaS product? 4=Bet the company/job/year; 3=Bet the quarter; 2=Bet the month/quota; 1=Bet the transaction/lead.

- What are the quantitative “stakes” of the decisions informed by the SaaS product? 4=$1M+ per decision, 3=$100k per decision, 2=$10k per decision, 1=$1k per decision.

- What is the org chart level of the typical daily user of the SaaS product? 4=C/Board/SVP-Level; 3=VP/Sr Director-Level; 2=Manager Level; 1=IC Level.

- What is the annual comp level of the typical daily user of the SaaS product? 4=$300k+ comp; 3=$200k+ comp; 2=$100k+ comp; 1=Sub-$100k comp.

Application of the Framework

Design Objectives

A user should be able to assess a business in under 5 minutes with no more research than is typically available from a larger company’s public website and marketing materials, or from a smaller / startup company’s pitch deck.

A user should be able to compare ratings from several actual or contemplated businesses to stack-rank them from most to least medium-term AI risk.

Non-Objectives

The assessment dimensions and the overall rating are ordinal, not interval or ratio, measurements (2 is not “twice as good” or “half as risky” as 1).[4]

No consideration is given to execution, performance, size/scale, efficiency, financial condition or capitalization. These should be assessed outside of this framework.

The assessment does not apply outside of B2B subscription SaaS companies.

Additional Considerations

- The identity of the rater and the date the rating was done should be recorded as part of the record.

- Do not become bogged down “in the weeds” of the Rating Guidelines. It is far better to make a quick, informed guess, and produce an imprecise result, than to spend more than five minutes total on rating a business for the first time.

- If a first rating is done as a “quick, informed guess” and the rater later learns significantly more detail (such as via a due diligence process), it may be wise to update the rating with the benefit of greater familiarity.

- If multiple raters are working together, it is best to have each rater independently conduct the ratings and then average them.

- It is less preferable to have multiple raters work “by committee,” unless it is the only way to assemble the necessary perspective on the various dimensions.
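The record-keeping and multi-rater guidance above could be captured as follows. This is a sketch under stated assumptions: the `RatingRecord` class, its field names, and the two example raters are hypothetical, and averaging each rater's Mean Rating is one straightforward reading of "independently conduct the ratings and then average them."

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass(frozen=True)
class RatingRecord:
    """One rater's independent rating of one business (hypothetical structure)."""
    rater: str       # identity of the rater, kept as part of the record
    rated_on: date   # date the rating was done
    sor: int
    nsc: int
    uu: int

    @property
    def mean_rating(self) -> float:
        return mean([self.sor, self.nsc, self.uu])


def combined_rating(records):
    """Average the Mean Ratings produced independently by each rater."""
    return mean([r.mean_rating for r in records])


# Hypothetical raters and ratings, for illustration only.
records = [
    RatingRecord("Rater A", date(2026, 2, 17), sor=3, nsc=2, uu=3),
    RatingRecord("Rater B", date(2026, 2, 17), sor=3, nsc=3, uu=3),
]
print(round(combined_rating(records), 2))  # 2.83
```

Keeping each rater's record separate, rather than storing only the combined figure, preserves who rated what and when, and makes later re-rating after due diligence easy to compare against the first pass.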

Limitations and Weaknesses

- The Framework applies only to Business-to-Business Software-as-a-Service models.

- The larger and more diverse a company’s lines of business are, the less reliable a single rating for the company will be. (This is why our definitions bias towards speaking about rating a “business” and not a “company.”)

- It is unwise to compare a rating on a business by one rater with a rating on another business by another rater. Comparison is really only valid when the same person (or mechanism, such as an AI rater) rates both compared businesses.

Using and Interpreting Ratings

- The best use of ratings is to compare more than one business, rated by the same rater(s) at the same time. This can also include scenarios of future plans for a single company.

- All ratings and risk indicators are best understood as relative to the group of businesses rated around the same time by the same rater(s).

- A group of rated businesses can be directionally sorted by Mean Rating, but very limited meaning should be ascribed to small (less than 1.0) differences in Mean Rating.

- If the framework is applied to a single business, not for comparison but for an absolute assessment:

  - Mean Rating over 3.0: excellent and should be considered lower risk.

  - Mean Rating under 2.0: poor and should be considered higher risk.
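As a rough illustration of the interpretation guidance above, the sketch below orders a group of businesses by Mean Rating and applies the two absolute thresholds. The function names, the business names, and the wording returned for ratings between 2.0 and 3.0 are assumptions; the Framework itself assigns no label to that middle range.

```python
def stack_rank(mean_ratings):
    """Order business names from most to least AI risk (lowest Mean Rating first)."""
    return sorted(mean_ratings, key=mean_ratings.get)


def absolute_reading(mean_rating):
    """Apply the single-business thresholds: over 3.0 and under 2.0."""
    if mean_rating > 3.0:
        return "excellent: lower risk"
    if mean_rating < 2.0:
        return "poor: higher risk"
    return "no absolute reading given"  # assumption: the Framework names no middle band


# Hypothetical businesses rated by the same rater at the same time.
group = {"Business A": 8 / 3, "Business B": 4 / 3, "Business C": 10 / 3}
print(stack_rank(group))  # ['Business B', 'Business A', 'Business C']
print(absolute_reading(group["Business B"]))  # poor: higher risk
```

The ordering is directional only: businesses whose Mean Ratings differ by less than 1.0 may sit next to each other in the list without the gap carrying much meaning.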

[1] Commercial-off-the-Shelf; that is, effectively commodity.

[2] Customer Premises Equipment; that is, not vendor-provided.

[3] “Retained” data may be downloaded and kept by the SaaS vendor’s customer; non-retained data is only usable within the context of the SaaS product itself.

[4] See e.g. https://en.wikipedia.org/wiki/Level_of_measurement

